SPEECH PRODUCTION

Work by Catherine Watson

Expressive facial-speech synthesis

Catherine Watson is working on expressive speech synthesis, which involves building an expressive facial speech synthesis system for social or service robots. The aim is to deliver information clearly, using empathetic speech and an expressive virtual face. The work builds on two open-source software packages: Festival (expressive speech synthesis) and Xface (an expressive 3D talking head). Catherine addresses how to express different emotions in speech with Festival, and how to integrate the synthesized speech with Xface. The project has been implemented on a physical robot and tested in several service scenarios.

Catherine Watson’s research interests lie in speech generation in humans and machines. This includes accent change, accent analysis, speech production, intonation modelling and speech synthesis, together with their applications in phonetics, robotics and speech language learning aids.

PUBLICATIONS

By her Ph.D. students

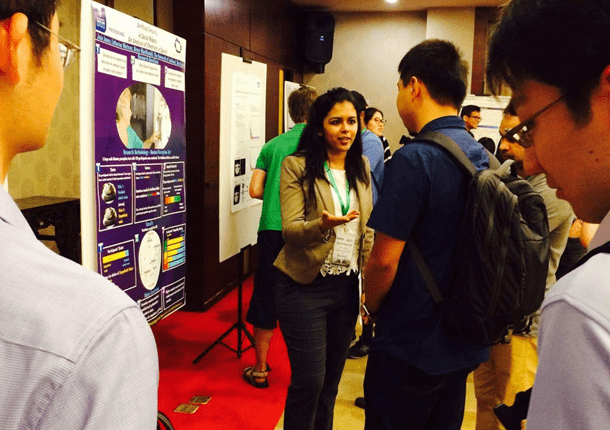

IEEE RO-MAN 2018, Nanjing, China, August 29-31, 2018

An Open Source Emotional Speech Corpus for Human Robot Interaction Applications

To better understand the wide array of emotions embedded in human speech, we introduce a strictly guided, simulated emotional speech corpus. Unlike existing speech corpora, it was constructed to maintain an equal distribution of four long vowels of New Zealand English. This balance is intended to facilitate comparison studies of emotion-related formant and glottal source features. The corpus also covers five primary and five secondary emotions. Secondary emotions are important in Human-Robot Interaction (HRI) for modelling natural conversations between humans and robots. However, few existing speech resources support the study of these emotions, and this gap motivated the creation of the corpus. A large-scale perception test with 120 participants showed approximately 70% accuracy in classifying primary emotions and approximately 40% accuracy in classifying secondary emotions. The reasons for this difference in perception accuracy between the two emotion types are investigated further. A preliminary prosodic analysis of the corpus shows significant differences among the emotions. The corpus is publicly available at: github.com/tli725/JL-Corpus.

Interspeech 2018, Hyderabad, India

Artificial Empathy in Social Robots: An analysis of Emotions in Speech

Synthesized speech has been used as the voice of robots in Human Robot Interaction (HRI). Because humans anthropomorphize robots, a robot that interacts empathetically is expected to increase the acceptance of social robots. In this work, a human perception experiment evaluates whether human subjects perceive empathy in robot speech. In this experiment, empathy is expressed only by adding appropriate emotions to the words being spoken. Participants' preferences for a robot that speaks empathetically, compared with one that uses a standard robotic voice, are also assessed. The results show that humans can perceive empathy and emotions in robot speech, and that they prefer it over the standard robotic voice. The emotions in empathetic speech need to be consistent both with the content of what is being said and with the user's emotional state. An analysis of the emotions in empathetic speech using the valence-arousal model revealed the importance of secondary emotions in developing empathetically speaking social robots.